# Leaderboard

This page gives an overview of the model candidates you have trained for this model. It allows you to compare, explore, and publish them. You can access it by selecting Leaderboard from the navigation sidebar, as seen below.

# Publish Best Model Candidate

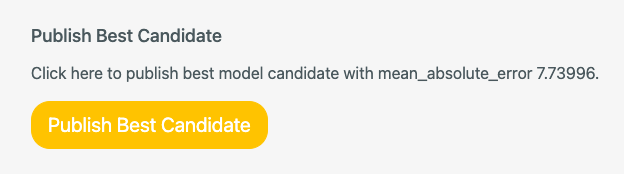

Once you have trained your first model candidate, the leaderboard page shows a button at the top that looks like this:

This button will be visible as long as your currently published model in your integration environment has a worse score than the best model candidate available.

Clicking on this button will publish the best model candidate currently on the leaderboard into your integration environment.

TIP

This is a quick and easy way to see if you have a new best model candidate that might be worth trying out.
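The visibility rule for the button can be pictured as a comparison between two scores. The following is a minimal sketch, not the platform's implementation: the `Candidate` class, its fields, and the `higher_is_better` flag are hypothetical names introduced for illustration, and whether a larger score counts as better depends on the metric you selected. Showing the button when nothing has been published yet is also an assumption.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    # Hypothetical record; the field names are illustrative only.
    candidate_id: str
    score: float


def best_candidate(
    candidates: Sequence[Candidate], higher_is_better: bool = True
) -> Optional[Candidate]:
    """Return the candidate with the best score, or None if there are none."""
    if not candidates:
        return None
    pick = max if higher_is_better else min
    return pick(candidates, key=lambda c: c.score)


def show_publish_best_button(
    published: Optional[Candidate],
    candidates: Sequence[Candidate],
    higher_is_better: bool = True,
) -> bool:
    """True when the published model scores worse than the best candidate."""
    best = best_candidate(candidates, higher_is_better)
    if best is None:
        return False  # no candidates trained yet
    if published is None:
        return True  # assumption: nothing published, so the button is offered
    if higher_is_better:
        return published.score < best.score
    return published.score > best.score
```

For an error metric where lower is better, pass `higher_is_better=False` so that the comparison is reversed.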

# Live Model

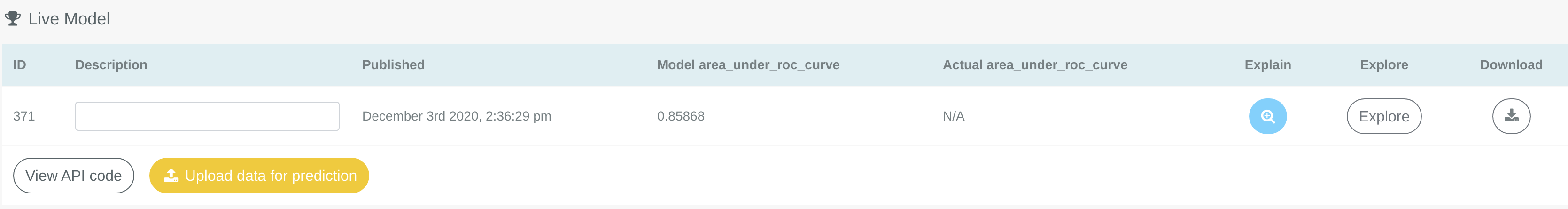

Once you have published a model candidate, the following table will be shown at the top of the leaderboard page:

This table shows you the model that is currently in your integration environment.

Because this model is published, a few additional options are available for it.

Explain - This will take you to the explain page so that you can look at things like feature importances or row prediction explanations for this model.

View API code - This will take you to the predict API documentation and playground for this model, allowing you to test out specific predictions against the model and also providing Python and R code snippets to call predictions against the model. On this page you will also find an example function for calling predictions directly from Google Sheets.

Upload data for prediction - This will allow you to upload a CSV file for predictions from this model. Once the file is uploaded, the model will create predictions and provide a CSV file containing the outcomes.
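Before uploading a CSV file for prediction, it can help to check that its header row contains the columns you expect. The sketch below uses only the Python standard library. It assumes, without confirmation from the platform, that the upload should contain the same feature columns as the training dataset. The function name and the column names in the usage example are hypothetical.

```python
import csv
import io
from typing import List


def missing_columns(csv_text: str, expected_columns: List[str]) -> List[str]:
    """Return the expected column names that are absent from the CSV header."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, [])  # an empty file yields an empty header
    present = {name.strip() for name in header}
    return [name for name in expected_columns if name not in present]
```

For example, `missing_columns("age,income\n30,1000\n", ["age", "income", "region"])` reports that the hypothetical `region` column is missing.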

# Model Candidates

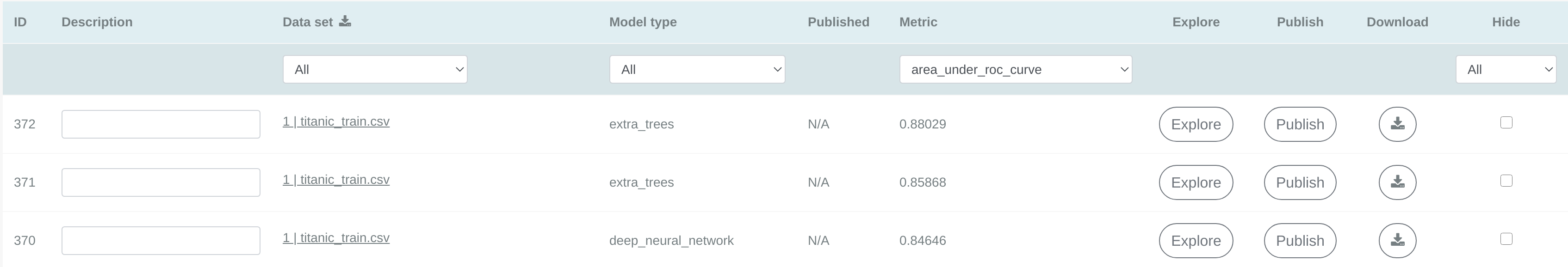

This section lists the best model candidates trained for this model. They appear in a table like the one shown in the picture.

Each row in this table will contain the following columns:

ID - Each model candidate is given a unique ID, which is shown across the platform wherever that model candidate is referenced.

Description - As IDs are difficult to keep track of, we also provide a description field that can be filled in with useful information about a model. For example, you could store the external business case outcome that this model generated.

Dataset - This shows which dataset this model was trained with. To download a copy of that dataset, click the link in this column. As datasets can share the same name, the number at the start of the dataset name can be used to distinguish between them.

Model type - This is the model type for this model. The possible model types can be seen here.

Published - This indicates when this model was published to the integration environment.

Metric - This number is the score that this model achieves for the selected metric. The metric defaults to the one selected during the most recent training run on this model.

Explore - This button will take you to the explore section, which shows the code and various scores and charts for any specific model.

Publish - This button will publish this specific model. It can be used instead of the "Publish Best Model" button if you want to test a model with a lower score.

Download - This button will allow you to download the model. It will create a zip archive containing the Docker images, Docker Compose configurations, and instructions required to run this model in your own environment.

Hide - This allows you to toggle which model candidates are shown on the leaderboard and considered as the best model candidate for this model. This can be useful if you have accidentally uploaded a dataset that leaks the target and trained models with it.

# Filtering

You can filter the model leaderboard based on each column. We store the top 250 model candidates that have been trained for a model.

TIP

If you want to see the best linear model but the top 50 candidates are all xgboost, you can filter the model type column by linear model to show only linear models. Only model types that have been trained for this model will be selectable.
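If you export or copy the leaderboard rows, the same filter can be reproduced offline. This is a minimal sketch under stated assumptions: each row is a plain dictionary, and the keys `model_type` and `metric`, along with the `higher_is_better` flag, are hypothetical names rather than fields of any platform API.

```python
from typing import Dict, List


def available_model_types(rows: List[Dict]) -> List[str]:
    """Model types that actually occur in the rows, in alphabetical order."""
    return sorted({row["model_type"] for row in rows})


def filter_by_model_type(
    rows: List[Dict], model_type: str, higher_is_better: bool = True
) -> List[Dict]:
    """Keep rows of one model type, ordered from best to worst score."""
    matches = [row for row in rows if row["model_type"] == model_type]
    return sorted(matches, key=lambda row: row["metric"], reverse=higher_is_better)
```

The first element of the filtered list is then the best candidate of that type, even when candidates of other types rank higher overall.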

# Clearing unpublished candidates

At the top of the model candidates leaderboard you will see a clear unpublished candidates button. It can be used to remove all unpublished model candidates from the leaderboard.

This can be useful if you have done some feature creation steps on your dataset and want to view the model candidates created from your new training run without the leaderboard including all the candidates you didn't choose to publish previously.

TIP

If you accidentally train a lot of model candidates with a dataset that leaks the target variable, you may want to clear unpublished candidates to remove these flawed models.
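The effect of clearing can be thought of as keeping only the rows that have a publication date. The sketch below is illustrative only: the `published_at` key is a hypothetical name, and it assumes that a candidate that was never published has no value in the Published column.

```python
from typing import Dict, List


def clear_unpublished(rows: List[Dict]) -> List[Dict]:
    """Return only the candidates that have been published at some point."""
    # Assumption: never-published candidates carry published_at = None (or no key).
    return [row for row in rows if row.get("published_at") is not None]
```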

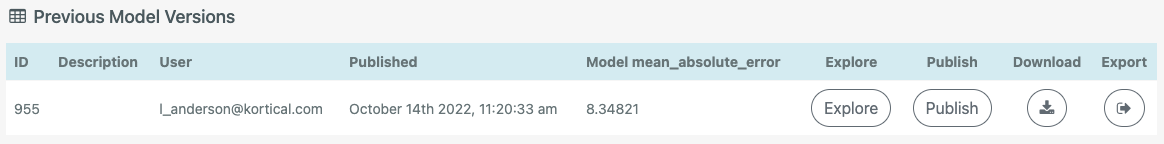

# Previous Model Versions

Below the model candidates table you will find another table called Previous Model Versions. This table provides a history of which models have been published into your integration environment, on which dates and by whom.

This is useful to keep track of which models were in use at what time.
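If you copy this history into your own records, you can look up which model was live at a given moment. The following is a minimal sketch, assuming the history is a list of `(published_at, model_id)` pairs sorted by publication time. The function name and data layout are hypothetical and not part of the platform.

```python
from bisect import bisect_right
from datetime import datetime
from typing import List, Optional, Tuple


def model_live_at(
    history: List[Tuple[datetime, str]], when: datetime
) -> Optional[str]:
    """Return the ID of the model that was published most recently at `when`.

    `history` must be sorted by publication time in ascending order.
    """
    publish_times = [published_at for published_at, _ in history]
    index = bisect_right(publish_times, when)
    if index == 0:
        return None  # nothing had been published yet
    return history[index - 1][1]
```

A binary search is used here so that the lookup stays fast for long histories. A model counts as live from the instant it was published.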