By: Andy Gray - CEO, Kortical

OpenAI just dropped a video about all of the wonderful jobs AI is going to create.

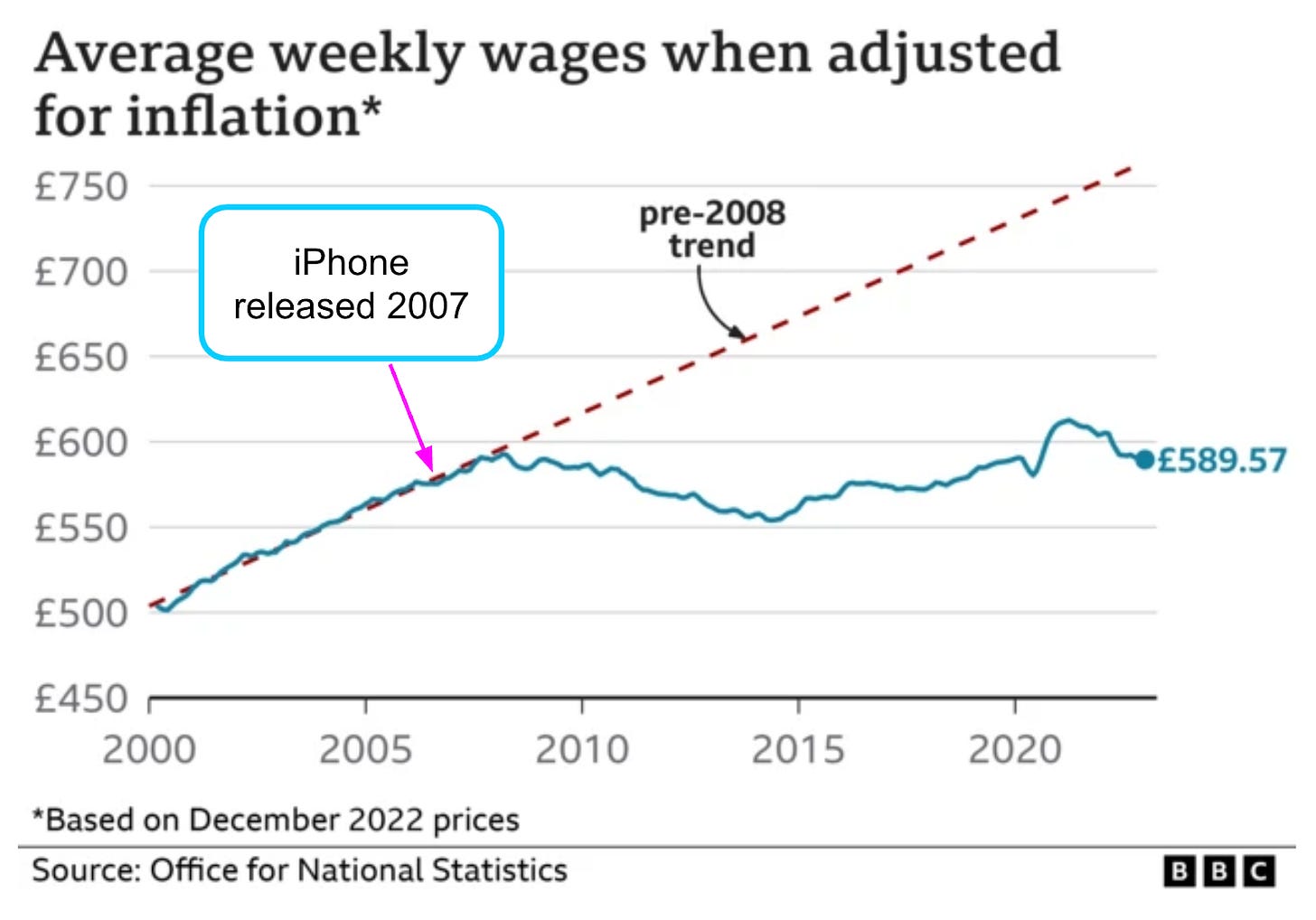

This feels very disingenuous, as there are already strong economic signals that we're in for a bumpy ride. Since the advent of ubiquitous internet, wages have already slid 30%.

I am as ebullient as anyone at the idea of never having to do another load of laundry or wash another pot but as AI is coming in, it's massively disrupting the world of work and crucially, how people make a living.

I wrote this article, backed by a lot of data, that shows how we can determine AI is not destined to create better jobs short term. It makes the economic case that technology has actually been making jobs worse for 20 years. It shows how the Jobpocalypse could happen faster than we might think but also shows that there is a brighter future with AI and how we can get there.

This is probably a weird article for an AI company founder to be putting out there, but politicians seem to be debating the window dressing, while they lose the shop. As such, I think it's important to start the conversation.

What do you think: are technology and AI making jobs better or worse? How do we make sure that everyone benefits and people don't get lost in the transition?

Exposing the Big Tech–AI lie

The uncomfortable truth about AI is that it is already putting people out of work, and this trend will only increase. We are woefully unprepared to deal with this fact.

As an AI start-up founder and someone who inhabits the world of online AI discourse, I generally see two camps. The first includes the AI positivists, largely recently converted crypto-bros and AI hypemen who try to convince me that every new incremental model release is a game-changer destined to revolutionize work forever, leading us inevitably toward a glorious AI utopia. The other camp is the AI skeptics who are trying to convince everyone that LLMs merely seem capable and will not amount to much at all.

I’m in a third camp: the tech is almost ready but we as a society are not. Here, I expose the lie that tech revolutions always create better jobs and offer what I believe is the most rational explanation for the last 20 years of wage stagnation. I'll lay out the evidence for how AI could, in the worst case, make 10-20% of workers worldwide effectively redundant in six months' time. Yes, I know extraordinary claims require extraordinary proof, but I’ve come with receipts.

I'll also explain why we can't halt AI development despite the existential risks, and why our only path to a positive outcome requires urgent collective action.

Exposing the lie

The standard mantra is “new technology always creates new and better jobs” but is that a universal truth? Or is it akin to a cosy log fire: for the first few logs you add, it gets warmer and cosier, but if you keep adding them, there's a tipping point where you burn down the house?

With steam and electricity we automated heat and motion. With the internet we automated physical information transfer. All of these took away manual labour burdens, were force multipliers and created the need for more brainpower based jobs to take advantage of the technology. Successive labour revolutions may have lulled us into a sense that this is a universal fact, not something that is true, until it isn't.

There is a great debate raging about the nature of intelligence and if LLMs are intelligent but let's put that to the side for a minute and assume that they are intelligent and that they are getting smarter at an accelerated rate. Can we reason about how that will affect human labour?

If all thought labour can be digitized, then economic incentives mean that the CEO will be compelled to replace all of their staff with AI, and the board will be incentivised to replace the CEO with AI. Right now this may sound a bit far-fetched but when we look at fully digital companies like WhatsApp, there were only 55 employees when it sold to Facebook for $19 billion and it had grown to serving hundreds of millions of users with just 13 people. Let that sink in, just 13 people were able to service the instant messaging needs of hundreds of millions of people. While not AI, it’s a great demonstration of the force multiplier that technology can be. As AI gets smarter, businesses of all sizes will need to embrace it to get more efficient or be overtaken by a competitor that is leaner. Taken to its logical extreme, we end up with capital owners but no need for human workers.

There has long been a refrain that jobs requiring the human touch will be the last to go, but recent results have been surprising:

- The share of U.S. adults who say they would feel comfortable using an AI chatbot in place of a human therapist rose from 20% in late 2022 to 34% by mid‑2024. That’s roughly a 70% relative jump in just 18 months. Among adults under 35, comfort now tops 50%.

- 85% of U.S. college and high-school students said ChatGPT’s tutoring was more effective than their human tutor

- Zendesk CX Trends 2025: 8 in 10 consumers are now comfortable with AI handling simple service tasks; 43% said they are excited about interacting with GenAI.
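The "roughly 70% relative jump" in the first bullet is easy to check. A minimal Python sketch, assuming only the two percentages quoted above (the helper name `relative_change` is illustrative, not from any survey tooling):

```python
def relative_change(before: float, after: float) -> float:
    """Return the relative change from `before` to `after` as a fraction."""
    if before == 0:
        raise ValueError("relative change is undefined when the starting value is 0")
    return (after - before) / before


# Comfort with an AI chatbot in place of a therapist:
# 20% in late 2022 -> 34% by mid-2024, as quoted in the bullet above.
therapy_jump = relative_change(20.0, 34.0)
print(f"Relative increase: {therapy_jump:.0%}")  # prints "Relative increase: 70%"
```

Note the difference between the 14 percentage point rise and the 70% relative rise; the bullet quotes the latter.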

These sorts of results by themselves aren't ironclad proof that we prefer AI for jobs with a human touch, but this is the worst AI will ever be, and people are already saying, “I'd rather interact with an AI, thank you.” This is only likely to swing ever more in AI’s favour as it improves.

So if we take a world with abundant cheap superhuman intelligence, what jobs are left for us?

Well, currently robotics seems to be lagging behind our ability to create AI. There are a huge number of manual jobs, like strawberry picking, that people can do better than machines, as our fine motor control and our sense of the environment we are in are far superior. We are also better at manual jobs that have a high degree of variation, such as plumbing, carpentry and warehouse stocking in non-standard environments.

All other things being equal, this would create enormous downward pressure, pushing people into low-paid manual labour where AI micro-manages our every move. Think of something like an Amazon warehouse but worse. Luckily, advanced humanoid robots are improving fast. If we take some of the stats from Tesla Optimus and assume it had human-equivalent AI but still had its current limitation of moving at about a third of the speed of a person, and that it would take only minimal breaks to recharge, it would be far more cost effective than a person on minimum wage, and would be even more cost effective than a person whose pay merely consisted of food!

This last point is actually good for us as a species, as it means that neither greedy elites nor world dominating AI will be incentivised to enslave humans to do cheap manual labour, huzzah! (We have to celebrate the small victories, after all)

So summing up that cheery look into a future with human level AI, it seems very likely that humans won’t be a necessary part of the means of production or provision of services at all. I’m sure there will still be an artisanal market for humans, by humans, but these will be luxury alternatives rather than necessities.

On the Lex Fridman podcast, Sam Altman said it's not a case of “what percentage of jobs AI can do” but letting people do their jobs “at a higher level of abstraction.” However, looking at it from the perspective above, it’s safe to say that if we have cheap abundant human-level intelligence and robotics, it does indeed burn the house down, at least as far as the promise of new and better jobs is concerned.

Therefore we can say with confidence that better technology does not inevitably lead to better, higher-paying jobs.

A Technology Lens on the Last 20 Years of Employment

We don’t have to remain stuck in the realms of thought experiment. Technology leading to worse jobs isn’t hypothetical; we have strong evidence of it right now. Economists typically blame the past 20 years of wage stagnation on the financial crisis and myriad other factors. They rarely focus on the 2007 release of the iPhone, which ushered in the smartphone era and ubiquitous, anytime-anywhere internet. This unlocked the gig economy, including delivery apps, taxis and other jobs where workers can pick up small tasks on the go. The explosion in mobile phones was a huge unlock for the third world to get online, enabling many more remote workers to compete for jobs with western workers.

The gig economy has reduced hourly wages 30% vs their traditional employment counterparts, with fewer protections and increased job insecurity. Due to globalisation, accountants, HR managers, marketing specialists, and lawyers have lost ground relative to skilled trades like carpenters and plumbers. After adjusting for CPIH inflation over the past 25 years, their median pay is now roughly 22% lower than skilled trades.

Another significant factor in wage stagnation and worsening job quality is the flattening of senior roles:

Decades ago, accountants typically had teams of clerks, bookkeepers and other roles to service just a few dozen clients at most. Last year, accountants reported that advances in technology and AI let them service on average 24% more clients than the year before. This is part of an ongoing trend stretching back many years. Now single accountants are servicing hundreds of clients and are less reliant on supporting roles. To compound the issue, the growth rate in clients per accountant is accelerating.

Benchmarks show lawyers are generating 75% more billable hours than a decade ago. This is in spite of stats showing lawyers spend 48% of their time on administrative tasks and since 1993 we see a 51% reduction in secretarial and related roles. Senior roles are expected to be far more productive while relying on fewer supporting staff to carry out their function.

Not long ago, producing a newspaper or magazine was labor-intensive, requiring teams of typographers, layout artists, and print specialists for each page. Today, a single writer or editor with a desktop publishing suite can do the entire job. As one account notes, “It once took several teams of copy boys and typesetters to do the job that one person does today with a Mac.” In other words, one individual can now handle writing, editing, layout, and publication preparation, whereas it used to demand multiple specialized roles. Similarly, tasks like fact-checking, research, and distribution have been accelerated by online tools. The net effect is that a small media team (or a lone content creator) can publish and reach an audience that would have required a large organization in the past.

With senior roles doing more of the job themselves with the aid of AI and other tools, it’s becoming harder for graduates to get a foothold in industry. A record 23% of Harvard MBAs were still unemployed after months of job hunting.

Taken together, the smartphone era, ubiquitous global internet, Web 2.0, SaaS, and now AI, while undoubtedly better technologies, have clearly not led to better jobs for western workers. Quite the contrary.

Looking forward, with intelligent AI, western workers won’t be competing with remote workers doing the job for a third of the price, but instead with AI for a marginal cost over the price of electricity. This is likely to create similar downward pressures but taken to a whole new extreme.

Copywriters and other writing professions have struggled since the release of ChatGPT. UK job ads in the Media & Communications family, which includes copywriters, content writers and journalists, were 48% below the pre-pandemic baseline by June 2025 and pay is down 5.2%. What this means is that the lower end of the market has been eliminated as people self-serve with ChatGPT. If all else stayed still, we'd expect the average wage to go up, as the remaining experts with an edge over AI-generated content would have been at the higher end of the wage pool. Instead, because these people are competing with something that is essentially free, wages have fallen even for those experts, despite the quality bar rising enough to exclude many people from the profession.

More recent models have started to become quite proficient at simpler programming tasks and have been improving fast. This has been reflected in U.S. software-developer job ads, which have fallen by over 51% in a year and 70% since early 2023. Anecdotally, I know one of our customers saw that with AI, per-coder output had increased, but they only had a limited amount of coding work they needed per year, so they cut their coding team by 50%.

Customer service was one of the first roles targeted by AI SaaS alternatives. In a 2024-25 survey of 697 companies globally, 56% of contact-centre operators trimmed new hiring and 37% laid off staff after rolling out conversational AI. What made customer service an easy target was that it generally requires fewer integrations and a lower level of prompt engineering to automate. Our K-Chat chatbot for Shopify, which has more integrations to handle detailed queries about order tracking, stock levels, and products, is reducing customer support costs by as much as 88% compared to the ~30% being reported for less integrated chatbots.

The AI industry lingo for such LLM-powered systems that combine integrations, processes and domain knowledge is ‘AI Agents’. Y Combinator, the famous start-up accelerator that has produced the likes of Airbnb, Coinbase, Reddit and Stripe, to name a few, has dubbed 2025 “The year of AI Agents”. We should expect to see an explosion of these verticalised agents that can replace tasks and roles in the near future. To quote YC Partner Jared Friedman, “Vertical AI agents could be 10x bigger than SaaS. Every SaaS company builds some software a team uses; the vertical‑agent equivalent is the software plus the people.” HappyRobot, a voice AI freight broker, managed to grow revenue 30x in just 12 months, which is phenomenal growth and is just one of many start-ups showing the truth of these claims.

I would say that the above is clear evidence that the rate of technological automation is accelerating and that AI, which is covering more tasks and roles, is a big driver of that acceleration. What we see is that more output is expected, with fewer or no support staff to assist, while wages have been stagnating. Workers also have less autonomy. In 2007 bank branch staff could override a loan decision; now it’s simply 'Computer says no.'

LLMs aren’t the only AI technology threatening sharp rises in unemployment. There are a number of competitors in the full self-driving space that have been making rapid progress and rolling out in more and more places. Waymo alone is operating over 250k driverless taxi rides per week in 4 U.S. cities; this is more trips than the entire Phoenix bus system. In the U.S., transportation of people or goods accounts for 7.4% of all jobs. Roughly $194 billion a year is spent on truck driver salaries. Gatik is already making inroads here, having done more than 600k autonomous truck runs with a 100% safety record.

Pessimists on the state of current AI and its near-term potential advancements will claim that it’s all a huge bubble, AI isn’t that smart, these companies will all amount to a giant waste of investment capital and jobs will be largely unaffected. Gartner recently claimed that 40% of Agentic AI projects will be scrapped by 2027. Senior analyst Anushree Verma expanded, “Current models do not have the maturity and agency to autonomously achieve complex business goals or follow nuanced instructions over time”. A point of interest for people like me who have been tracking Gartner claims for a while now is that they are essentially saying they expect 60% of current projects to be successful, when in 2018 they said to expect only 15% of AI projects to be successful. So while it sounds negative, they’re actually taking an increasingly positive view on AI. That said, without pointing fingers, there are many high-profile AI Agent projects where publicly released information suggests their approaches are unlikely to succeed, so undoubtedly there will be some amount of bubble popping.

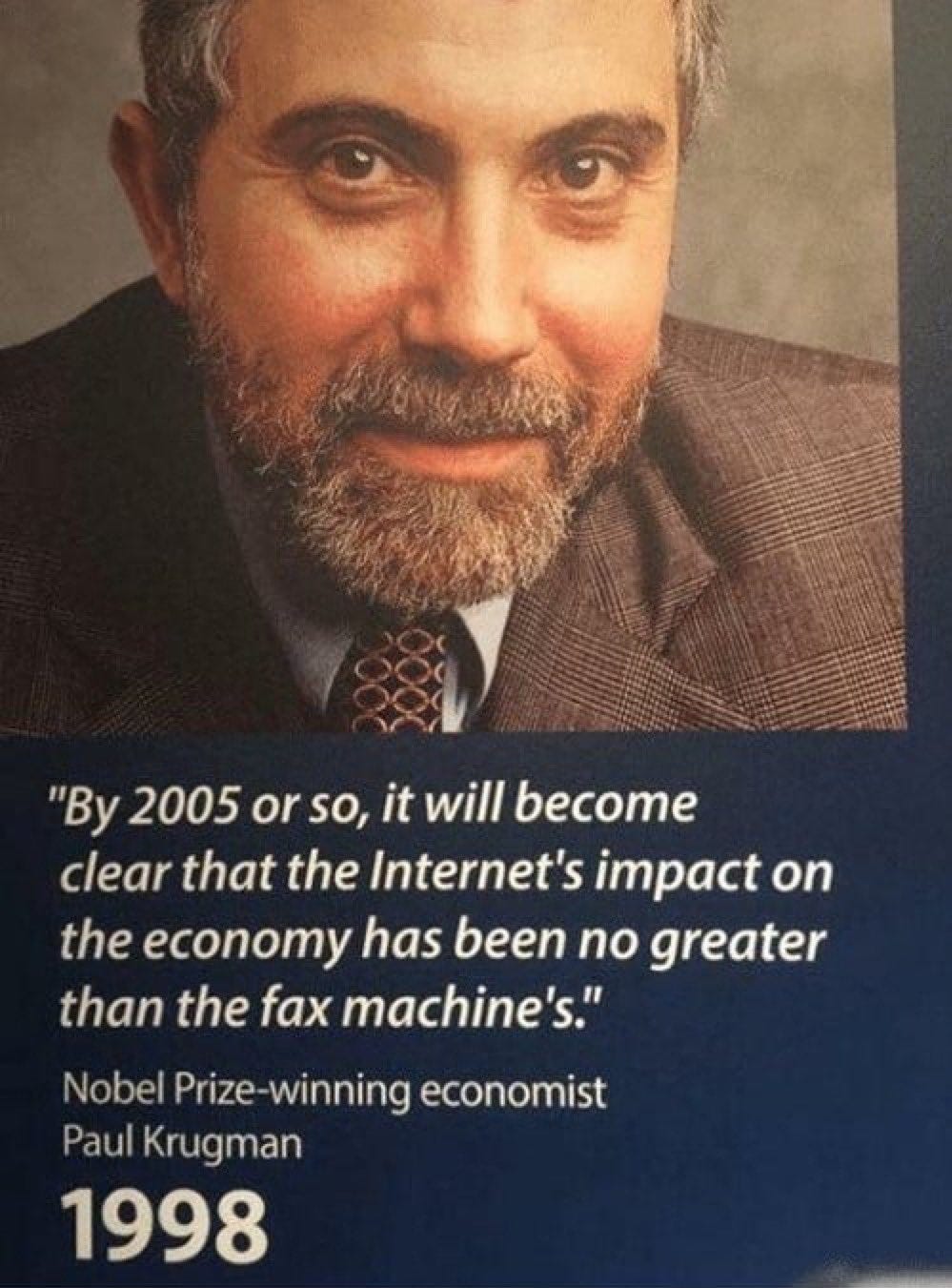

The trick is not to extrapolate too much from that data. In 1998 when Amazon was already doing $610 million annual revenue, Nobel Prize winning economist Paul Krugman predicted that the internet would have no more impact on the economy than the fax machine.

But is AI actually smart?

The Gartner analyst I just quoted said current models are too limited to do real work. The big assumption at the heart of my thought experiment above, which AI critics will jump up and down pointing to is “assuming we have human-level AI”. They'll frustratedly point out that beyond mechanical proofs, AI has not proved anything new in maths or science. It’s not creating new knowledge.

They'll then usually say something like: sure, it gets impressive results on the SATs, Bar exam and myriad other tests, but LLMs can't reason. It didn't do well on the ARC AGI exam, and even a 6-year-old can handle most of those questions. (ARC AGI is able to generate novel, IQ-test-like pattern recognition questions.)

The first set of critiques about being able to make new scientific or maths breakthroughs is true, they're not that smart yet, but let's face it, most jobs don't require that level of smarts and new models are getting better all the time. The second critique was true of the original ChatGPT but recently o3 scored 87.5% on the ARC AGI test and the previous, extremely poor results were mainly a trick of picking on the weakness of previous models at visual reasoning compared to text reasoning anyway.

ARC AGI benchmark performance improvement over the last year, from o1 to o3

For most industry data tasks, current LLMs are on par with or exceed human performance. But – and there is a huge but here – currently they have low task coherence over large numbers of tokens, or in simple terms, they lose their train of thought a lot. When you put too much data in they start focusing on the wrong things, get stuck in strange behaviour loops and generally act dumb in ways humans generally don't. The implication for work is that if you get a browser-operating LLM and assign it some relatively simple white-collar work, it will go off the rails before long.

This is how we end up with an LLM that can in one request answer maths Olympiad questions most mortals could never dream of understanding and in the next request fail to follow a simple instruction like “for the tenth time, stop telling me about Julius. I'm asking about Augustus Caesar.”

LLMs are smart and useful in a lot of ways we care about but they are machines. They don't think like us and present a whole new set of challenges in getting them to work effectively that humans do not. What AI has going for it is that once you solve the problem for your AI Agent, you can create as many parallel AI Agents as you want, with the same problem solved.

So I do agree with the Gartner analyst to some degree about the ability of current LLMs to follow instructions over time to achieve complex business goals. However, that’s only if we’re trying to use the raw model or a light wrapper on the base model on a long or complex task. Currently to overcome these limitations we have to very carefully wrap the raw models in custom code, break down problems so we can let LLMs reason over small amounts of context or create specialised LLMs that deal with specific parts of inputs and do all of these specialisms for every task we want them to do. I did an explainer on how to create AI Agents last year that has more detail on how to overcome these limitations if you are keen to get under the hood but the key takeaway is that they are smart enough for many jobs right now. It’s just that it will take a few years to build out all of the scaffolding to enable current LLMs to take over or vastly enhance a specific role.

This is the work the cohort of YC companies are racing to do across many verticals right now and YC isn’t the only start-up accelerator in town; this is a story that’s being repeated in many accelerators and many Fortune 500s globally right now. UBS predicts global AI spending to rise 60% to $360 billion this year. It’s the gap between the LLMs being released and the few years it takes to specialize an AI Agent for a vertical that has led many commentators to think AI has underdelivered. However, the tide of AI Agents is rising and the first few have already reached shore.

Crucially if it takes fewer than 4 years to create an AI Agent to take on a given role, then it’s likely that we’ll be able to create AI Agents to fill new roles as fast or faster than we could re-train people into new roles. So even if we do create new jobs, for a lot of those jobs at least, it will likely be AI doing those jobs too.

Shortening the timeline

The world is frightfully unprepared for automation at this pace and the level of job disruption it’s likely to bring. The challenge is that this pace is very likely to accelerate.

The two main points of comparison for me between LLM versions are ability to reason and task coherence.

As reasoning improves, a model is better able to operate on data within a given context, i.e. apply intelligence to the data at hand and not just regurgitate facts. This is effectively the ceiling of the level of intelligence the model is capable of. This is something I’ve been paying very close attention to and if you’d like to go deeper, you can see my explanation of model reasoning and my analysis of o1, which scored 75% on my reasoning test. In the 7 months since, even better models have been released, and o3 scored a hair under 94% in high compute mode! The rate of improvement has been incredible and, if it continues on this trajectory, models will surpass the smartest humans in a couple of years.

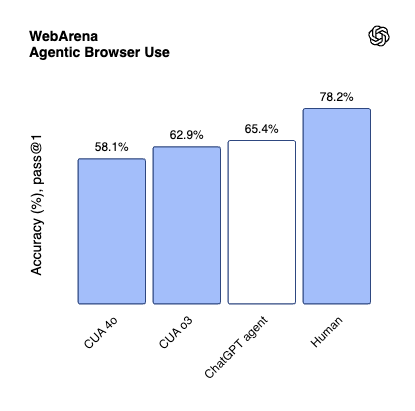

The other major dimension I’m paying attention to is task coherence. This is the ability of LLMs to pay attention to a large number of instructions and data, over a large number of steps, without losing track of what they are supposed to be doing. Again, this is improving in leaps and bounds. WebArena is a benchmark designed to test an LLM’s ability to complete tasks in a browser, like:

“Create an efficient itinerary to visit all of Pittsburgh’s art museums with minimal driving distance starting from Schenley Park, then record the order in my awesome-northeast-us-travel repository.”

or

“Tell me the name of the customer who has the most cancellations in our store’s history.”

These are essentially real-world tasks that people complete in a browser. WebArena was released in mid 2023 with a state-of-the-art score of 14.4%. Now, just 2 years later, ChatGPT Agent is scoring 65.8%.

This is an insane rate of improvement. The human baseline is 78.2% and was created with a number of Computer Science Undergrads from a top ranked university, so proficient computer users. The limitations were that they could take a max of 30 actions, though over half the human errors were from misinterpreting the constraints.
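To get a feel for what this rate implies, here is a deliberately naive sketch that draws a straight line through the two WebArena scores quoted above and asks when that line would cross the human baseline. The linear trend, the exact two-year gap and the function name `years_until_baseline` are all assumptions for illustration; benchmark progress is rarely linear, so treat the result as back-of-envelope arithmetic, not a forecast.

```python
def years_until_baseline(start_score: float, current_score: float,
                         years_elapsed: float, baseline: float) -> float:
    """Linearly extrapolate a score trend; return years left until `baseline`.

    Returns 0.0 if the baseline has already been reached.
    """
    if years_elapsed <= 0:
        raise ValueError("years_elapsed must be positive")
    if current_score >= baseline:
        return 0.0
    rate = (current_score - start_score) / years_elapsed  # points per year
    if rate <= 0:
        raise ValueError("score is not improving, so the baseline is never reached")
    return (baseline - current_score) / rate


# Figures quoted above: 14.4% at release (mid 2023), 65.8% about 2 years later,
# and a human baseline of 78.2%.
years_left = years_until_baseline(14.4, 65.8, 2.0, 78.2)
print(f"Naive linear estimate: {years_left * 12:.1f} months to the human baseline")
```

On these numbers the improvement is about 25.7 points per year and the remaining gap is 12.4 points, so the straight line crosses the human baseline in roughly six months.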

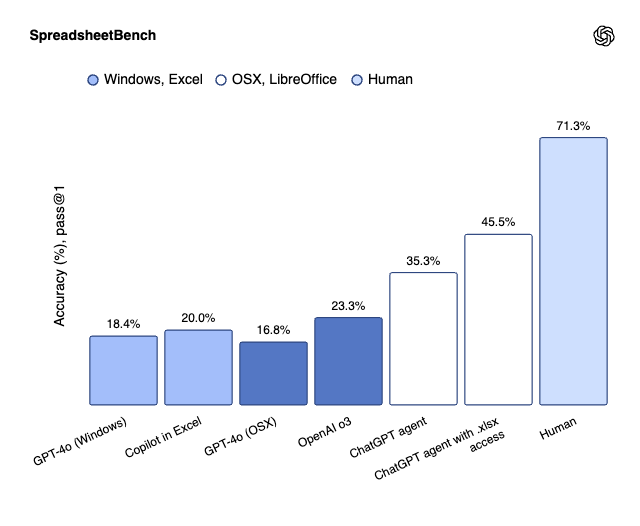

Another benchmark that shows progress on multi-step, job-like computer use is SpreadsheetBench, with tasks like:

“On Sheet1 replace any value in column F that is more than two standard deviations above the mean of its row with the text ‘Anomaly’, and highlight those cells yellow. Assume the sheet can have between 5 and 20 000 rows.”

This benchmark evaluates models on their ability to edit spreadsheets derived from real-world scenarios. Here ChatGPT Agent scored 45.5%, where the human score was 71.3%. For the human evaluators they hired university graduates and “experts in Excel”.

This benchmark is a better proxy for real work, as the tasks are harder and there was no constraint on the number of actions they could take. Getting less than 50% of the tasks correct is sackable for most jobs, but it’s not hard to see that in a couple of generations, LLMs could be high performing on these tasks and indeed capable of doing long, complex, work-like tasks in the browser independently, without careful specialised AI Agent construction.

This is an example of OpenAI’s ChatGPT Agent doing a complex task

That last sentence is very important. As we noted above, it’s currently taking 3-5 years to build vertically specialised AI Agents that fully take advantage of current LLM capabilities. If we have smart models with high task coherence that can use browsers or computers independently, then it would just be a case of an employer saying ‘here’s the training docs and videos, please read them and then use the computer to do the job.’ No special integrations, no custom coding, just tell it what to do, as you would a person, and let it get on with it. It will switch between Outlook, Notion, ZenDesk or whatever other tools it needs to use, all by itself.

That is, if we don’t reach some sort of LLM capability plateau. What I’m hearing from the research labs of OpenAI and Anthropic is that they were a little worried we’d maxed out pre-training, but that we’re just getting started tapping into agentic reasoning and what can be accomplished there. They’re also getting more serious about tackling long-running tasks, which quite simply hadn’t been a focus, so we should expect more leaps there too.

The timelines I’m hearing from these labs to create computer-operating AI Agents with enough reasoning capability and task coherence to do a huge number of current jobs are mostly in the range of 6 months to 2 years. For full human-capable AI, their proposed timelines tend to range from 6 months to 2030. This does seem to track with the performance and capability increases we’re seeing as new models are released, and as yet we can’t see any major barriers that stand in the way.

A lot of people in the major LLM companies, who are the deepest experts on this tech, seem to believe full, smartest-human-level intelligence is only 2-5 years away, depending on how bullish they are.

That said, people who work on LLMs tend to be biased towards thinking that LLMs are the answer. Other notable AI proponents like Yann LeCun disagree. Yann believes that we will need a very different architecture from LLMs for human-level intelligence; he’s an advocate for research in the JEPA architecture rather than LLMs. Personally I think that for a conscious AI, we probably will need an architecture more akin to Yann’s proposal. Yet I, and even the creators of the LLM neural network architecture, have been incredibly surprised by what they’ve coined ‘the unreasonable effectiveness’ of LLMs. We did not expect the techniques we use in LLMs to be able to produce the level of intelligence we see them display now, yet here they are. There is still a lot of research exploration to be done and they are getting better at a rapid pace, so who knows, maybe LLMs are all we need to get to superhuman intelligence and possibly even consciousness. There are still a lot of open questions.

Though from where we are now, non-conscious LLMs capable of following a process, using a computer and independently doing a lot of lower-complexity computer-use jobs seem highly probable and could be here in as little as a year.

If we do drastically increase the task coherence of LLMs on long-running tasks, overnight the 10% of U.S. jobs that are in low-complexity office admin and back-office processing could be redundant. For countries like the Philippines, it could be as much as 20% of jobs. This only considers jobs that could be fully automated. How many more jobs will become, say, 70-80% automated, allowing the best workers to serve more people, raising the bar for that job role, pushing others out and lowering wages?

On a personal level, if I could push a button and pause progress for a few years till we’re better prepared, I would. I’m actually quite worried about how drastically unprepared we are for all this as a society and the speed at which it could all happen, even with the current level of AI capability. So this is not a fever-dream of someone trying to wish things into existence that are not.

If the models keep improving linearly at the current rate and we don’t run into any big blockers, we get computer-operating agents capable of learning and doing jobs from training materials in the next few years. Then we don’t just have the worryingly rapid job automation from the horde of vertical AI Agents in the previous section; we have an army of online computer workers capable of doing a broad range of white-collar work that would all come online at the same time, overnight. The only real limitation is the compute power to run them. This might help you understand why Sam Altman is out there trying to raise $7 trillion to build more chips: he’s fully aware and wants to proceed at full speed.

Is it bad and can we stop it?

Dear reader, I hope you’ll agree with the thrust of the arguments so far:

- New tech does not inevitably lead to new better jobs, even if that’s always seemed true so far.

- Evidence suggests we might need to rethink the idea of jobs that are safe from AI.

- AI is already pretty smart and it’s here.

- The delay in people feeling the job impact of the current level of AI is because it takes a while to specialize and integrate into real work, but it’s happening and set to accelerate.

- Potentially as much as 40%+ of white collar jobs could be lost to AI over the next decade.

- The models are only going to get smarter and might within a year or two suddenly unleash a wave of AI workers that make a huge number of people redundant overnight.

So with this clear and present threat to many people’s way of making a living, it might seem logical to think ‘why don’t we just stop or at least pause for a while?’ A petition led by Elon Musk, Yoshua Bengio, Geoffrey Hinton, Steve Wozniak and over 30,000 others, sought to do exactly that.

As much as I myself would create a global pause if I could, I don’t think China or Russia would agree or at least if they did, I wouldn’t trust them to actually stop. Given this, it would be incredibly risky to pause development and risk falling behind. The first countries to release this technology will have a huge economic advantage leading into the next phase of industry and as much as Western democracy is showing its flaws, it still seems vastly preferable to the other options.

We are also unsure whether super-intelligent AI will have what’s known as a ‘hard take off’, where we finally create AI that can improve itself and suddenly it creates a runaway chain of self improvement that gives the nation that creates it an insurmountable advantage. The latest LLMs are capable of self improvement under human guidance.

Unless we navigate the next few years very carefully, we could end up with unprecedented wealth inequality where, for the first time in history, the owners of the means of production are not dependent on an army of complicit people working within the system to help them maintain their status. If we think of the worst rulers in history, such as Hitler, Stalin, Mao and their ilk, they still needed a nation of people to largely cooperate, to carry out their wishes. With AI and robotics, a mad king could keep an entire nation enslaved with no other human collaborators, allowing so far unimaginable evil to be enacted.

AI potentially rising up and taking over has been well explored in movies and literature but seems less likely as an immediate threat, given we don’t have self-motivated, conscious AI yet and no clear timeline to get it. What has been less explored is what happens if we have non-conscious superhuman-level intelligence and prompting or training it to “Wipe out humanity and take over the world” is easier for it to do than “Protect and service humanity’s wishes”. Some individual could set an AI loose to take over, just to watch the world burn, and it could outcompete all of the pro-humanity AIs simply because it has the easier task. An example of this sort of asymmetry in LLMs right now is that they find it much easier to critique at a sophisticated level than to create.

However unlikely these hellish scenarios may seem, this will be a unique time in the history of life on Earth, and it’s important to mitigate the most extreme potential negative outcomes. All of that said, if we don’t go full Luddite and try to pause AI development, Western democracies will likely dominate the AI landscape. If this happens, the most likely bad outcome would be an ownership class frozen in time. With human labour irrelevant, there would be no way for new owners to emerge and no social mobility, so incredible wealth inequality beyond anything we’ve seen would become the new norm. Many would argue it’s already the status quo, so this is possibly a bad but not terrible outcome. Hence not pausing development is the best option, even if it does cause rapid job upheaval, since a pause would only move AI progress underground.

That’s a quick tour of the potential bad scenarios; now let’s look at the good one. Even if there is still huge wealth inequality, we could create a Post Scarcity society, where robots and AI are able to produce enough food and services to enter an age of abundance. A Post Scarcity society means that nobody wants for the basics. A reasonably high quality and variety of food, education, and shelter are available to everyone at nominal or no cost. All basic needs are met.

This might sound like some sort of communist utopian pipedream, but if the labour of a reasonable percentage of the population creates no real value beyond novelty, then we do need a social safety net, as the alternatives are horrific. And given it’ll be robots all the way down, it should be cheap enough to produce food, shelter and a good quality of life that it is economically viable. The four main arguments against this are:

- ‘They’ want to keep everyone oppressed, so it won’t actually be a good standard of living

- We will all be horrifically bored and miserable without purpose. People ‘yearn for the mines’

- We can’t afford it

- It will never happen

Examining these arguments in more depth:

‘They’ want to keep everyone oppressed

This idea is not just idle chatter for conspiracy theorists. The average U.S. law firm partner makes 1,559x per hour what a Cambodian Nike factory worker makes. In other words, for every hour that the lawyer works, they can enjoy 1,559 hours’ worth of work from other people. If there weren’t a third world and the minimum wage everywhere was $15, that would reduce their spending power more than 15-fold. Technology is a multiplicative force for human labour, but fundamentally this is still the heart of the equation: the ultimate underlying currency is human labour.
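As a rough check on the arithmetic above, here is a minimal sketch of how the “15-fold” figure follows from the two numbers in the text. It assumes the $15 floor is an hourly wage and that spending power is measured purely in hours of other people’s labour that one partner-hour can buy. The function names and the sample wage passed in are illustrative assumptions, not figures from the underlying data.

```python
RATIO = 1559        # from the text: partner pay per hour / factory-worker pay per hour
FLOOR_WAGE = 15.0   # from the text: hypothetical minimum wage, assumed to be USD per hour


def fold_reduction(worker_wage: float, floor_wage: float = FLOOR_WAGE) -> float:
    """How many times fewer labour-hours one partner-hour buys once the
    cheapest labour costs floor_wage instead of worker_wage."""
    partner_rate = RATIO * worker_wage          # implied by the 1,559x ratio
    hours_before = partner_rate / worker_wage   # equals RATIO
    hours_after = partner_rate / floor_wage
    return hours_before / hours_after           # simplifies to floor_wage / worker_wage


def break_even_wage(target_fold: float, floor_wage: float = FLOOR_WAGE) -> float:
    """Current worker wage at which the reduction is exactly target_fold."""
    return floor_wage / target_fold


# Illustrative input only: a worker wage of $1.00 per hour is an assumption.
print(fold_reduction(1.0))
print(break_even_wage(15.0))
```

The partner’s own rate cancels out, so the reduction depends only on the ratio between the new floor and the current wage. Under these assumptions, “more than 15-fold” holds whenever the factory wage is below $1 an hour.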

This is what creates the incentive for the rich to keep the poor poor, and also why we’ve essentially created a world economy where the slave class is out of sight and out of mind in a third world country, working for a pittance, yet enabling our comfortable lifestyles. Human-level AI and robotics completely obviate the need for these oppressions and, absent that incentive, I hope we can shape a society that can improve everyone’s way of life. There is still the issue of finite resources, so that could be a fly in the ointment, but there should be far less incentive than there is now for society to perpetuate an exploited underclass of humans.

The children yearn for the mines

Whenever the idea of a post scarcity society is raised, most people tend to side with the argument that people will struggle to find meaning in their lives, that we’ll all be depressed and that society will fall apart.

People were making similar arguments back in 1842, when the idea was raised that maybe children shouldn’t work in the mines: “It is not true there is nothing else for them to do, but they prefer the pits because they have more freedom there.” The surprising popularity of Minecraft aside, most people would now agree this is crazy talk.

The idea that people are fulfilled now because they have jobs, and will be miserable if they don’t have to do these jobs, seems laughable when the World Health Organization has listed depression as the main cause of ill health worldwide, right now, while people still need gainful employment to provide for themselves and their loved ones. When we adjust for both health status and household income, retirees still report the highest mean life satisfaction, so they don’t seem to miss their jobs too much.

This should hardly be surprising. We’ve had many nobilities over the centuries that had an underclass doing all of the meaningful work, and many of those nobles found meaning in art, sport, literature, science and society. There weren’t hordes of nobility yearning for the mines; by most self-reports they were quite content.

If we look at chess, AI is already absurdly better than the best human, yet chess as played by humans is more popular than at any time in the history of humanity. We will still compete with other humans and draw deep satisfaction from it. I believe this will be the same across the range of human endeavour.

We can’t afford it

I’m leaping out of my comfort zone as a technologist here, but since 1979 U.S. labour productivity has climbed almost 80%, yet the typical worker’s pay is up barely 17%, a gap of roughly 60 points that has widened every business cycle. IMF work attributes much of the shortfall to falling labour shares and rising mark-ups in “super-star” firms, meaning owners and executives, not payrolls, pocket the extra output. The same pattern of high productivity and flat median wages shows up across most advanced economies in OECD decoupling studies.
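To make the size of that gap concrete, here is a small sketch using only the two rounded figures quoted above (productivity up roughly 80%, typical pay up roughly 17% since 1979). The variable names are illustrative, and because the inputs are the approximate values from the text rather than the underlying series, the outputs are indicative only.

```python
# Rounded growth figures quoted in the text (since 1979), not the raw data series.
productivity_growth = 0.80   # "almost 80%"
pay_growth = 0.17            # "barely 17%"

# Gap in percentage points between the two growth rates.
gap_points = (productivity_growth - pay_growth) * 100

# How much higher typical pay would be today had it tracked productivity:
# ratio of the two growth indices, minus one.
pay_shortfall = (1 + productivity_growth) / (1 + pay_growth) - 1

print(f"gap: {gap_points:.0f} percentage points")
print(f"pay would be about {pay_shortfall:.0%} higher if it had tracked productivity")
```

On these rounded inputs the gap is about 63 points, and typical pay would be a little over half as high again had it kept pace with productivity.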

If we try to plug that leak with higher corporate‑tax rates, the money simply hops borders, evaporates through creative depreciation or goes to an overseas competitor as the overhead makes local options non-competitive. A more durable option is to tax the automation and intangible rents at the point where the revenue is earned from residents, no matter where the server farm or holding company sits, a broader cousin of today’s digital‑services levies and firmly in line with the OECD’s Pillar One plan to re‑allocate taxing rights to market jurisdictions.

America, home to most of the “super-star” firms, typically opposes such taxes, but as AI progresses and the U.S. government, along with others, needs more cash to support a social safety net, such options may become more palatable. If a nation can sort out effective taxation that raises enough revenue to afford cheap, diversified access to energy, chips, robots and AI, then it should be in a position to think about ‘where to invest?’

It will never happen

During the last two decades of wage stagnation, we’ve seen a trend across the world of people looking to more extreme political solutions to buck the status quo and hopefully improve their lot. I think a major challenge is that the real root of the problem and the potential solutions have not really made a dent in the political conversation in many nations.

Andrew Yang is the closest we got. Even offering to give every American $1,000 a month, he didn’t manage to win a single delegate in the Democratic primaries. Still he made it onto 7 of the 11 live TV debates and had a big grass roots following, so it’s not all bad.

My hope is that if the people who can clearly see what’s coming get the message out there, then perhaps more politicians will take note and run on platforms like this.

The big challenge is that if we keep getting it wrong and create economic disasters of our own making like Brexit and now The Tariffs, then it gets harder to make something like this work, budget-wise.

Even if $1,000 a month is completely non-viable for now, we need to look at how we smooth people through job transitions and set a path towards increasing the safety net as technology progresses.

Summary

As a teenager, it was my conviction about how wonderful a post-scarcity society would be for humanity that fueled my interest in AI, but in a world where Mr. Clippy was doing absolutely nothing of any use, it was easy to gloss over the transition from gainful employment to AI doing all the work and just focus on the upsides of AI and robots for all.

Even then it was hard to foresee how trade agreements, globalization and technology would all interplay to stagnate wages, which would snowball into an unpredictable political climate where it’s hard for serious economic discourse to gain any traction and thus prepare ourselves for what is to come.

Yet here we are, in the worst case potentially months away from a rapid set of redundancies the likes of which have never been seen before, and we’re woefully unprepared.

Even if it takes a decade to fully play out, at the speed politics accomplishes things, we need to be taking urgent action now.

The reflex action might be to pull the handbrake and try to stop the rollercoaster but that just risks getting left behind or worse.

Conversely, if the current trend continues and politics gets less grounded in facts and reality, evidence suggests it will drive people ever more toward populism and authoritarianism, in the hope that some big change fixes everything. In doing so, we risk completely missing the key issues, walking blindly into the AI era without establishing the social safety nets and mechanisms for everyone to share in the wealth. This could create an elite that does not have to answer to the 99.9% who don’t own AI and robotics companies, nor worry much about ensuring everyone shares in the AI upside.

Aside from the fact that AI will keep getting better, I’m not sure what will happen, but it seems all any of us can do is try to get as many people thinking about this as possible. We can start the discussions, hopefully catch the eye of politicians or media outlets, and start raising the profile of this conversation: ‘What are we doing to help ensure that we all benefit from better technology?’ Because whatever we’re doing now, it’s not working!

*Originally posted on Substack