Months to minutes with AutoML

How to deliver a high-performing AI app in just one day

One-day app

To many, delivering an AI app in one day sounds incredible, as in not credible, and admittedly you might want to add a few more days of polish and testing before putting it live. That said, we did exactly this at the FCA Pensions Tech Sprint, building an app that could predict pensioners’ life and financial satisfaction 20 years ahead of time, with over 80% accuracy, from real data. The app could visualise their spending habits and even text them to intervene. This effort won Kortical the FCA innovation award. What follows is a brief outline of the technology that makes it possible to build a fully working AI app in just one day.

“85% of all Artificial Intelligence (AI) projects would fail to deliver value for their CIOs.”

Creating the model

The key factor in shortening months of data-science work to minutes is AutoML. To illustrate the power of AutoML, we can compare it to producing a great work of art. While we attribute a painting by a Dutch Master to a single artist, the artist’s apprentices and students actually did a lot of the work. The Master would do the outline and charcoal sketching, while the students would fill the painting with colour and sketch the backgrounds. Similarly, AutoML lets the data scientist set the broad outlines and parameters for the task, and the AI then searches for the best solution to the problem. It’s like having millions of apprentices working on a problem with an almost infinite number of possible solutions, at machine-level execution speeds that leave any humans trying to solve the same problem in the dust. An effective AutoML platform strikes the right balance: the broad brushstrokes should not be cumbersome to define and change, yet the data scientist should still have enough control to create exactly the solution they want.
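The following is a minimal sketch of the core AutoML loop in plain Python, to make the apprentice analogy concrete. The data scientist defines a search space (the broad brushstrokes), and the machine builds, scores and ranks every candidate pipeline on held-out data. This is an illustrative exhaustive grid search over a tiny, hypothetical space: a k-nearest-neighbours classifier, with and without feature scaling, on synthetic data. It is not Kortical’s method, and production AutoML systems search far larger spaces with smarter strategies.

```python
import itertools
import math
import random
from statistics import mean, pstdev


def make_data(n, seed):
    """Synthetic binary data: feature 0 separates the classes, feature 1 is large-scale noise."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        label = rng.randint(0, 1)
        X.append([label * 2.0 + rng.gauss(0, 0.5), rng.gauss(0, 100.0)])
        y.append(label)
    return X, y


def fit_scaler(X):
    """Per-column mean and standard deviation, learned from the training rows only."""
    columns = list(zip(*X))
    return [mean(c) for c in columns], [pstdev(c) or 1.0 for c in columns]


def apply_scaler(X, stats):
    """Standardise each column using previously fitted statistics."""
    means, stds = stats
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]


def knn_predict(train_X, train_y, x, k):
    """Majority vote among the k nearest training rows (Euclidean distance)."""
    nearest = sorted(zip((math.dist(x, t) for t in train_X), train_y))[:k]
    votes = [label for _, label in nearest]
    return max(set(votes), key=votes.count)


def evaluate(config, train, valid):
    """Build one candidate pipeline from a config and return its validation accuracy."""
    (train_X, train_y), (valid_X, valid_y) = train, valid
    if config["scale"]:
        stats = fit_scaler(train_X)
        train_X, valid_X = apply_scaler(train_X, stats), apply_scaler(valid_X, stats)
    preds = [knn_predict(train_X, train_y, x, config["k"]) for x in valid_X]
    return sum(p == t for p, t in zip(preds, valid_y)) / len(valid_y)


def auto_search(space, train, valid):
    """Try every combination in the search space and rank candidates, best first."""
    keys = list(space)
    configs = [dict(zip(keys, values)) for values in itertools.product(*space.values())]
    scored = [(evaluate(config, train, valid), config) for config in configs]
    return sorted(scored, key=lambda item: item[0], reverse=True)


# Hypothetical search space: the "broad brushstrokes" a data scientist might set.
SEARCH_SPACE = {"k": [1, 3, 5, 7], "scale": [False, True]}

train, valid = make_data(200, seed=1), make_data(200, seed=2)
leaderboard = auto_search(SEARCH_SPACE, train, valid)
best_score, best_config = leaderboard[0]
print(f"best pipeline: {best_config}, validation accuracy {best_score:.2f}")
```

In this toy setup the unscaled pipelines should do little better than chance, because the large-scale noise feature dominates the distance calculation. Having the search discover that is exactly the kind of grunt work the article describes handing to the machine.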

With traditional data science taking so long, the sheer amount of time it takes to explore one solution means it’s often hard for a data scientist to step back after taking one avenue and see the wood for the trees. They get so bogged down in the detail of creating the data pipeline, encoding the variables, getting their choice of model to work, defining their test and validation sets and tuning the model that they lose sight of all the approaches and options they thought might apply at the start of the process. By letting AI do the dirty work at speed, they can stay focused on the high level, evolving their solution rapidly and monitoring how model performance is affected. While AutoML is a great way to let data professionals who may not be trained in ML or AI produce machine learning models and solutions, more advanced data scientists also gain huge advantages in speed, insight and productivity by using it. It’s really like data-science superpowers, whatever your level.

Zappistore

Zappistore, one of our clients, had been working on an NLP problem for two years. On their first day using the Kortical platform, they managed to outperform not only their own models but also their competitors and even their human outsourcers. This happened because, once they could see the full potential of the different ways of implementing a solution, they took a step back and applied their domain insight to new ways of creating features from the data they were using, which was key to getting such a great result.

Hopefully, with that insight, you can see how we were able to rapidly build a model at the FCA Pensions Tech Sprint to test the data’s predictive power. What we wanted to test was whether a pensioner would be satisfied with their financial circumstances 20 years later. We picked out key financials and spending patterns, breaking spending down into categories such as food, housing, alcohol, holidays and gambling. We then built a cut-down model that used only spending habits to predict the outcome. The fact that this worked very well showed that spending patterns are key indicators of pensioners’ future financial outlook.
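One plausible way to turn raw transactions into spending-pattern features like these is to compute each category’s share of total spend. The sketch below makes three assumptions. Transactions arrive as (category, amount) pairs. The categories are the ones named in the article plus a catch-all "other". Shares are used rather than raw amounts, which is our own choice, so that pensioners on different incomes can be compared on the same scale.

```python
from collections import defaultdict

# Categories named in the article; a real taxonomy would likely be finer-grained.
CATEGORIES = ["food", "housing", "alcohol", "holidays", "gambling"]
FEATURE_NAMES = CATEGORIES + ["other"]


def spending_features(transactions):
    """Convert (category, amount) pairs into each category's share of total spend.

    Any category not in CATEGORIES is grouped under "other".
    """
    totals = defaultdict(float)
    for category, amount in transactions:
        key = category if category in CATEGORIES else "other"
        totals[key] += amount
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {name: 0.0 for name in FEATURE_NAMES}
    return {name: totals[name] / grand_total for name in FEATURE_NAMES}


# Made-up example: "books" is not a modelled category, so it lands in "other".
example = [("food", 300.0), ("housing", 500.0), ("gambling", 100.0), ("books", 100.0)]
features = spending_features(example)
print(features)
```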

Explaining The Model

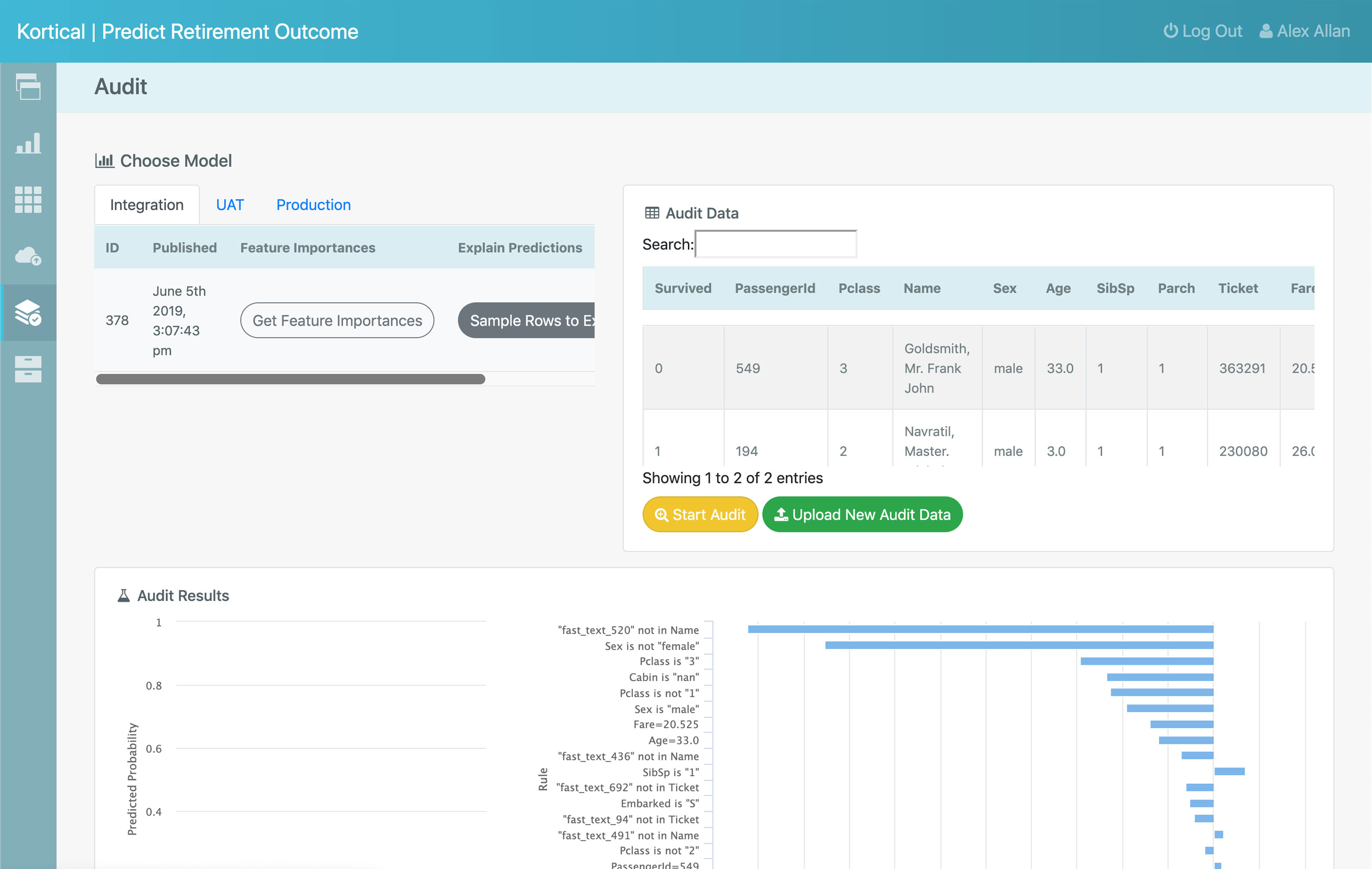

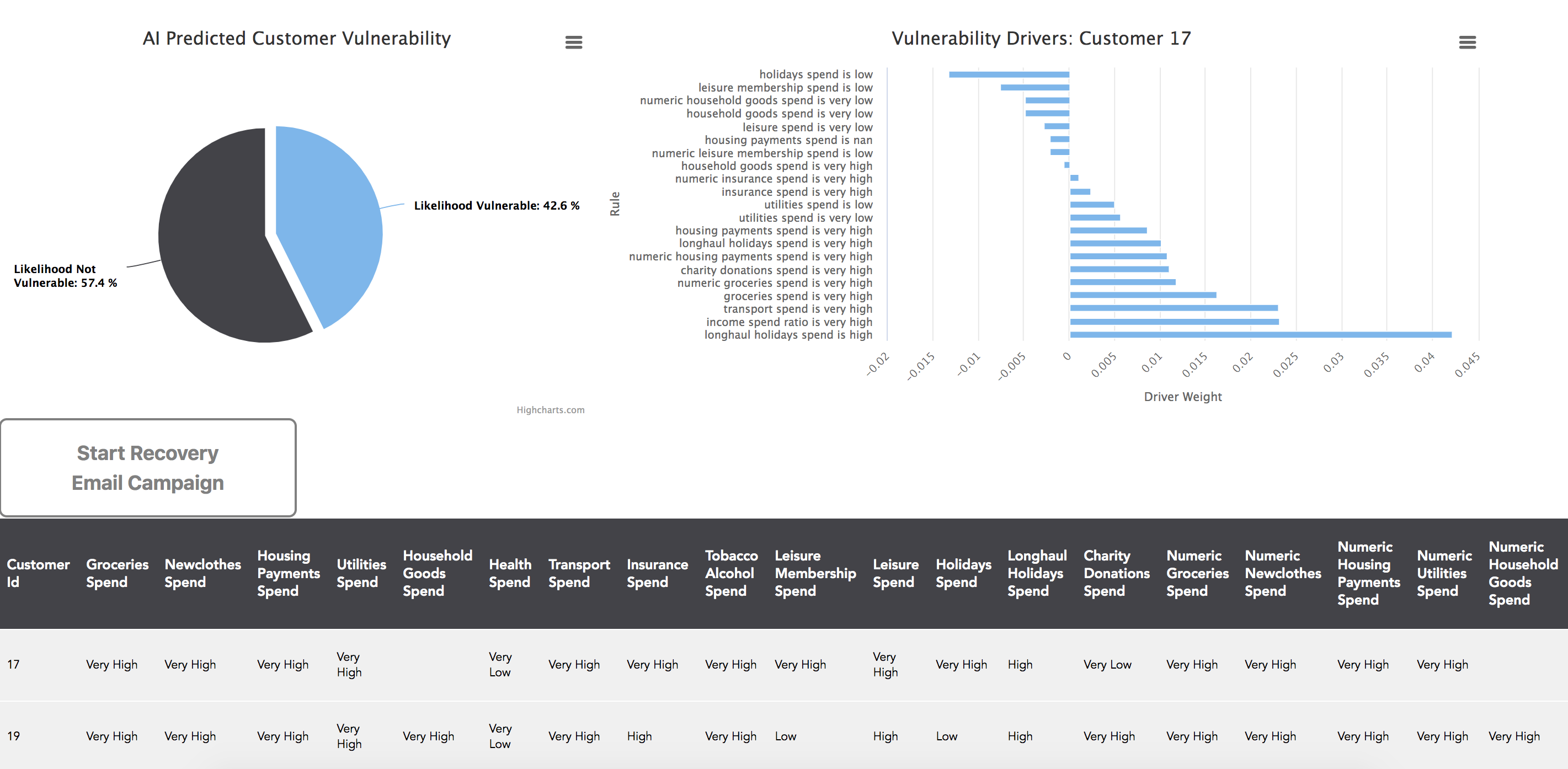

Having created a model that predicts from spending patterns who will experience financial difficulty, the next useful step is to explain which spending categories are the main drivers of the outcome. This lets us inform customers so they can act to change their patterns, or see interactively how changes would affect their outlook. Traditionally, AI and ML models have been thought of as black-box technologies. Many platforms also cheat by using a simpler model to approximately explain the predictions. There are now techniques, however, that can fully explain the decision making of Deep Neural Networks and other models, right down to an individual prediction and what went into that decision.
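For a linear model, an exact per-prediction explanation is straightforward. Each feature’s contribution is its weight multiplied by how far the customer’s value sits from a baseline. The contributions add up exactly to the difference between the customer’s score and the baseline score. The sketch below works in log-odds space for a logistic model. The weights, bias and baseline (think of it as the population’s average spending shares) are all hypothetical numbers. Deep Neural Networks need heavier machinery, such as Shapley-value methods. Treat this as an illustration of what a complete explanation looks like, not as how any particular platform computes one.

```python
import math

# Hypothetical logistic model over spending shares (weights in log-odds units).
WEIGHTS = {"food": -0.5, "housing": -1.0, "alcohol": 2.0, "holidays": 0.5, "gambling": 4.0}
BIAS = -1.0
# Hypothetical baseline, e.g. average spending shares across the training population.
BASELINE = {"food": 0.3, "housing": 0.4, "alcohol": 0.05, "holidays": 0.1, "gambling": 0.02}


def log_odds(x):
    """Linear score; positive values push towards predicted financial difficulty."""
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def probability(x):
    """Logistic link from log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds(x)))


def explain(x):
    """Per-feature contributions to log-odds relative to BASELINE, largest first.

    For a linear model these sum exactly to log_odds(x) - log_odds(BASELINE).
    """
    contributions = {f: WEIGHTS[f] * (x[f] - BASELINE[f]) for f in WEIGHTS}
    return dict(sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True))


customer = {"food": 0.25, "housing": 0.35, "alcohol": 0.10, "holidays": 0.10, "gambling": 0.20}
for feature, value in explain(customer).items():
    print(f"{feature:>9}: {value:+.3f}")
print(f"predicted probability of difficulty: {probability(customer):.2f}")
```

With these made-up numbers, gambling dominates the explanation. That is the kind of insight that could be shown to the customer alongside the prediction.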

In the case of the FCA app, the explanation is key to the output, so the need for explainability is obvious. There are also many good reasons to want explainable AI when producing any model at speed:

- We can see what data is important and double down on it in the data-science process. In the example above, after loading all the data and making the first prediction, we were able to see that spending habits were a major predictive factor and home in on them.

- Getting stakeholder buy-in: in any business, someone has to sign off on using an AI model. If they can’t understand why and how it makes decisions, they’re less likely to green-light it.

- Removing unwanted bias: this is important for any company operating in 2019. Data scientists can attempt to do it manually through experimentation. Being able to look under the hood at the decision-making process for every prediction, and surface every factor that contributes to bias, saves a lot of time.
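A simple, common starting point for a bias check is the gap in positive-prediction rates between groups, often called the demographic parity difference. The sketch below assumes each prediction is paired with a protected attribute; the group labels and predictions are made up. A single metric like this is only a first screen. It does not replace inspecting the per-prediction decision making described above.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += int(pred)
        counts[group][1] += 1
    return {g: positives / total for g, (positives, total) in counts.items()}


def parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up predictions for two hypothetical groups.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rates(preds, groups))  # {'a': 0.75, 'b': 0.25}
print(parity_gap(preds, groups))      # 0.5
```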

Being able to rapidly explain any model created by AutoML makes for a powerful feedback loop, which makes iteration and improvement very fast.

Model Management

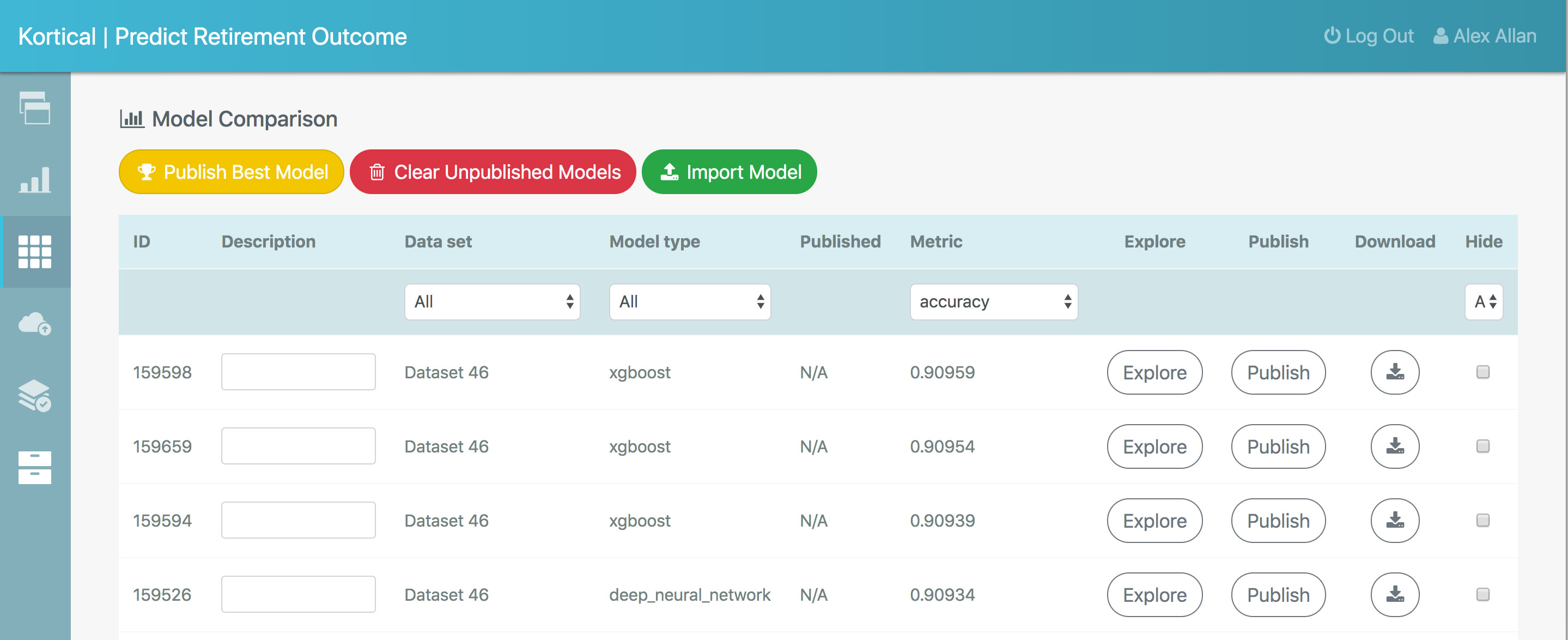

Typically, data scientists manage their experiments haphazardly in notebooks or raw code; the very organised might even use source control to track their progress. But as in any exploratory process, changes are regularly tested and abandoned while iterating towards the best solution. This process is not well catered for by traditional development tools, which expect an inexorable march in one direction.

AutoML compounds this problem by creating thousands more models and data pipelines than a data scientist could build manually. As such, automated tracking, ranking and comparison of machine learning solutions becomes a hard requirement for any platform that uses AutoML.

Even though the FCA tech sprint lasted just 24 hours, over 5,000 model solutions were created. If these hadn’t been tracked, scored and ranked automatically, it would have taken more than 24 hours just to sort through the outputs.
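The core of automated tracking and ranking is a registry. It records every candidate with its score and settings and returns the current leaders on demand. The sketch below shows one; the class and field names are hypothetical, the model-family labels are placeholders and the scores are random. It also keeps everything in memory, whereas a real platform would persist each run along with the pipeline and artefacts needed to reproduce it.

```python
import random
from dataclasses import dataclass, field


@dataclass(order=True)
class Experiment:
    """One candidate model; instances compare (and therefore sort) by score only."""
    score: float
    name: str = field(compare=False)
    params: dict = field(compare=False, default_factory=dict)


class Leaderboard:
    """Minimal in-memory record of every candidate model, ranked by score."""

    def __init__(self):
        self._runs = []

    def record(self, name, score, **params):
        self._runs.append(Experiment(score, name, params))

    def top(self, n=5):
        """Best n runs, highest score first."""
        return sorted(self._runs, reverse=True)[:n]

    def __len__(self):
        return len(self._runs)


# Simulate a sprint's worth of candidates with random, made-up scores.
rng = random.Random(0)
board = Leaderboard()
for i in range(5000):
    board.record(f"candidate-{i}", rng.random(), model=rng.choice(["gbm", "nn", "linear"]))

for run in board.top(3):
    print(f"{run.name:>15}  {run.score:.4f}  {run.params}")
```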

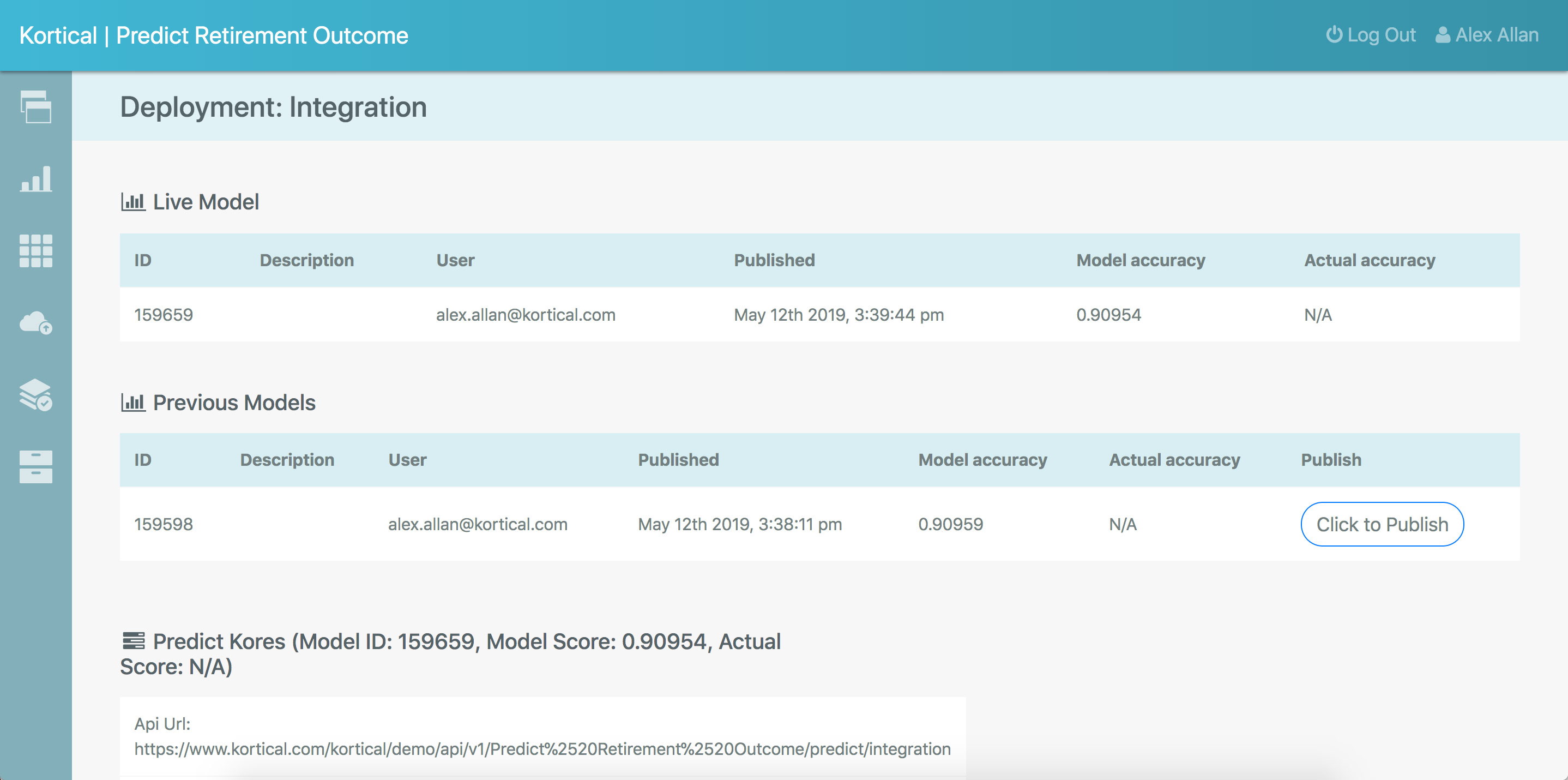

Deploying The Model

As more AI projects leave the prototype and pilot stage, the industry is just waking up to the fact that delivering AI models that meet enterprise-level SLAs is a whole different problem from creating a model. The people with the crossover coding and data-science skills are generally referred to as data-science engineers. All the big publications are writing about the shortage of data scientists, yet according to LinkedIn, data scientists outnumber data-science engineers 100 to 1. We don’t have full insight into the Gartner stats or into where the time goes on projects that run years past their expected completion dates. Even so, we would not be surprised if a large portion of it is spent in this phase; we have seen companies spend 9 months on it and fail.

Some of the largest companies in the world already use a proven platform that has solved these issues to serve predictions in production. With such a platform, models can be published in a few clicks and are ready to meet any demand. The REST API makes it very easy for developers to integrate these AI/ML models into apps and services.
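From the app developer’s side, integrating a model served over REST usually comes down to POSTing JSON and reading JSON back. The sketch below uses only Python’s standard library. The endpoint URL, the bearer-token header and the `{"rows": ...}` / `{"predictions": ...}` payload shapes are all hypothetical placeholders, not Kortical’s actual API, so check your platform’s documentation for the real contract.

```python
import json
import urllib.request

# Hypothetical endpoint; example.com is a reserved placeholder domain.
PREDICT_URL = "https://example.com/api/v1/predict"


def build_predict_request(rows, url=PREDICT_URL, token=None):
    """Build (but do not send) a JSON POST request asking for predictions."""
    body = json.dumps({"rows": rows}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def parse_predictions(raw_body):
    """Extract the predictions list from a JSON response body (hypothetical schema)."""
    return json.loads(raw_body)["predictions"]


def predict(rows, token=None, timeout=10.0):
    """Send the request and return predictions; needs a live endpoint to succeed."""
    request = build_predict_request(rows, token=token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_predictions(response.read())


# Inspect the request offline; calling predict() would hit the network.
request = build_predict_request([{"food": 0.3, "gambling": 0.1}])
print(request.get_method(), request.full_url, request.data)
```

Building the request separately from sending it keeps the payload logic testable without network access.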

As such, deploying the models for the tech sprint was only a few seconds’ work. Because we had the API from the start, app development was able to kick off in parallel with the data science.
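A common way to start app development before the model is ready is to code against an agreed interface. A stub that returns placeholder predictions stands in until the real service is live. The sketch below expresses a hypothetical contract (rows in, one probability per row out) with `typing.Protocol`; the class names, function name and 0.5 threshold are illustrative.

```python
from __future__ import annotations

from typing import Protocol, Sequence


class Predictor(Protocol):
    """The contract the app codes against: rows in, one probability per row out."""

    def predict(self, rows: Sequence[dict]) -> list[float]: ...


class StubPredictor:
    """Stand-in used before the real model exists; returns a fixed probability."""

    def __init__(self, value: float = 0.5):
        self.value = value

    def predict(self, rows: Sequence[dict]) -> list[float]:
        return [self.value for _ in rows]


def risk_labels(predictor: Predictor, rows: Sequence[dict], threshold: float = 0.5) -> list[str]:
    """App logic written against the contract, not against a specific model."""
    return ["at risk" if p >= threshold else "on track" for p in predictor.predict(rows)]


print(risk_labels(StubPredictor(0.7), [{"food": 0.3}, {"food": 0.2}]))  # ['at risk', 'at risk']
```

When the real model is deployed, any client with a matching `predict` method can replace the stub without touching `risk_labels`.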

Delivering To Value

So far this article has been dedicated to the AI and ML models, but people can’t consume AI directly. They need a service or application that provides targeted processing and interfaces for their use case, and this means app code. The de facto standard for writing robust systems that live in the cloud is the microservice architecture. In the cloud we typically expect hardware to fail more often than traditional computers do, but by using microservices we can provide near-perfect uptime for our apps and services.
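Redundancy gives near-perfect uptime on unreliable hardware for reasons of simple probability. Suppose each replica of a service is independently up with probability a, and traffic fails over to any healthy replica. The service is then down only when all n replicas are down at once, so its availability is 1 - (1 - a)^n. The sketch below assumes independent failures and instant failover, which real systems only approximate because shared dependencies such as a network or database can fail together. The 99% per-replica figure is made up.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def replicated_availability(a: float, n: int) -> float:
    """Probability that at least one of n independent replicas is up."""
    return 1 - (1 - a) ** n


for n in (1, 2, 3):
    availability = replicated_availability(0.99, n)
    downtime = (1 - availability) * MINUTES_PER_YEAR
    print(f"{n} replica(s): availability {availability:.6f}, ~{downtime:,.1f} min/year down")
```

With these illustrative numbers, going from one replica to three cuts expected downtime from roughly 3.65 days a year to about half a minute.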

Building microservice architectures is another new and relatively difficult skill that’s hard to hire for. Even for our team, which has built several successful microservice-based systems, it has taken multiple years each time. Typically, though, this code is not that different from project to project, and the business and interface code tends to be orders of magnitude easier to write and test.

The approach we’ve taken, which we think is the most sensible, is to automate the parts that are both common to most solutions and hard to do. By providing a robust microservice architecture and an API that’s easy to drop custom app code into, we make it easy for frontend and backend developers to create new apps and services.

Because of this, for the FCA tech sprint we were able to create a scalable frontend that took in customer data, processed it, sent it to the backend ML solution APIs to get predictions and returned those results to the UI. The app had lots of interesting charts to dissect the data and provide insight to the customer, and it sent customers text alerts to let them know their results. This whole solution was delivered in just one day.

Now, to be totally candid, before putting the solution live we would definitely get a designer to do a UI pass, add some testing and so on. But because so much of the process of creating the app was automated, there really isn’t that much to test, and it shouldn’t take more than a few days.

Conclusion

While everything here is explained in the context of Kortical, this type of technology is very new, so I have tried to give insight into why each feature is important and how it impacts a project, to equip the reader to make better buying decisions.

It should also be noted that while all vendors use the terms Deep Neural Networks and AutoML, there is, as with the paintings we compared them to, wide variation in quality in terms of model performance, stability and scalability. If we were to recommend a way to tell vendors apart, we’d say look for a track record of quality results with brands you trust.

The data from Gartner paints a very different picture of timescales and success rates from the one we’ve shown here. While this was a prototype for the FCA tech sprint, we’ve seen enterprise projects go live just 10 weeks after deploying the platform, delivering a 56% increase in revenue for the business area. Projects using Kortical thus far have a 100% success rate, vs the reported 15% industry average. Having gone through the process ourselves, we know that even an experienced team with the perfect skill set has a lot of tooling to get right. We don’t expect every development team to build their own database technology, and if they did, we’d expect a lot more failed projects; we think the same is true for data science.

AI acceleration platforms are set to revolutionise the nascent AI industry, and Kortical is already proving this across many industries and sizes of business, from the NHS to small six-person teams. If you would like to know more, please do get in touch.

